Where can I find the full-featured version of DeepSeek-R1?

Someone has compiled a table of websites that currently offer the full-featured version of DeepSeek-R1. Rather than reinvent the wheel, I'll simply share the link here.

https://ccnk05wgo092.feishu.cn/wiki/WeGmwNVgLi9SFtkfwnacu6H5nfd?table=tblfZlmGJHoAYrQe&view=vewv6xDDG2

How to deploy DeepSeek-R1 locally?

First, a clarification: most people lack the hardware and resources to deploy the full-featured version of DeepSeek-R1 locally, so this guide focuses on deploying the smaller distilled models.

Install Ollama: This is straightforward. Simply download the software for your platform from the official website (https://ollama.com/download) and install it. If you're a Linux user, you can also install it with the following command:

    curl -fsSL https://ollama.com/install.sh | sh

Run the model: Open a terminal and run

    ollama run deepseek-r1:7b

Ollama will automatically download the relevant model files. You can also choose a different model based on your needs:

| Model Name | Run Command |
| --- | --- |
| DeepSeek-R1-Distill-Qwen-1.5B | ollama run deepseek-r1:1.5b |
| DeepSeek-R1-Distill-Qwen-7B | ollama run deepseek-r1:7b |
| DeepSeek-R1-Distill-Llama-8B | ollama run deepseek-r1:8b |
| DeepSeek-R1-Distill-Qwen-14B | ollama run deepseek-r1:14b |
| DeepSeek-R1-Distill-Qwen-32B | ollama run deepseek-r1:32b |
| DeepSeek-R1-Distill-Llama-70B | ollama run deepseek-r1:70b |

By default, Ollama downloads models from the official Ollama registry, so users in China may experience slow downloads or even failures. In such cases, you can use the accelerated mirror provided by the ModelScope (魔搭) community in China:

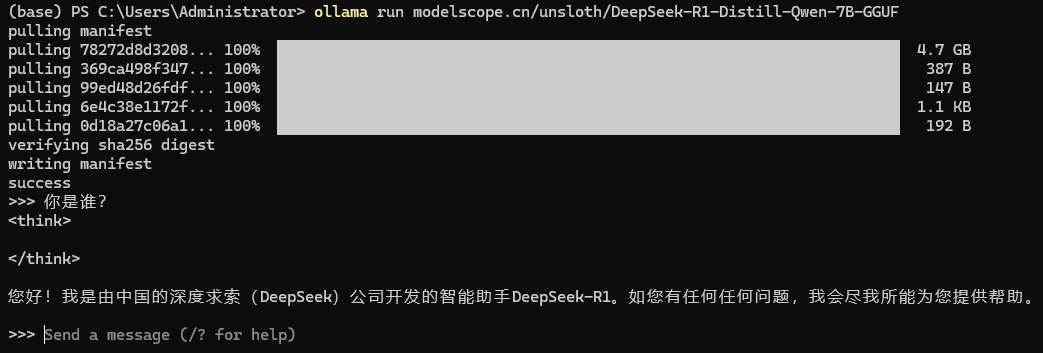

| Model Name | Run Command |
| --- | --- |
| DeepSeek-R1-Distill-Qwen-1.5B | ollama run modelscope.cn/unsloth/DeepSeek-R1-Distill-Qwen-1.5B-GGUF |
| DeepSeek-R1-Distill-Qwen-7B | ollama run modelscope.cn/unsloth/DeepSeek-R1-Distill-Qwen-7B-GGUF |
| DeepSeek-R1-Distill-Llama-8B | ollama run modelscope.cn/unsloth/DeepSeek-R1-Distill-Llama-8B-GGUF |
| DeepSeek-R1-Distill-Qwen-14B | ollama run modelscope.cn/unsloth/DeepSeek-R1-Distill-Qwen-14B-GGUF |
| DeepSeek-R1-Distill-Qwen-32B | ollama run modelscope.cn/unsloth/DeepSeek-R1-Distill-Qwen-32B-GGUF |
| DeepSeek-R1-Distill-Llama-70B | ollama run modelscope.cn/unsloth/DeepSeek-R1-Distill-Llama-70B-GGUF |

For example, to run the 7B model, execute the following command:

    ollama run modelscope.cn/unsloth/DeepSeek-R1-Distill-Qwen-7B-GGUF

Once the model is running successfully, you can start interacting with it directly:

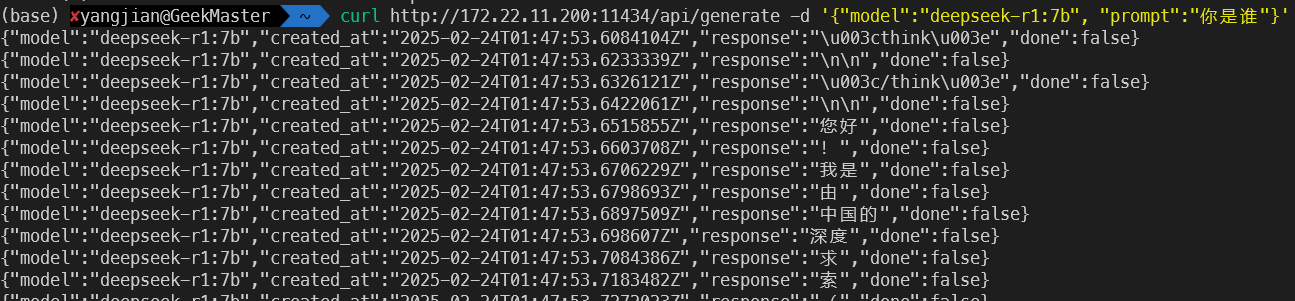

If you want to use the API to call the local model, refer to the following commands:

    # Default Ollama model
    curl http://localhost:11434/api/generate -d '{"model":"deepseek-r1:7b", "prompt":"Who are you"}'

    # If using the accelerated mirror, the model name should be: modelscope.cn/unsloth/DeepSeek-R1-Distill-Qwen-7B-GGUF:latest
    curl http://localhost:11434/api/generate -d '{"model":"modelscope.cn/unsloth/DeepSeek-R1-Distill-Qwen-7B-GGUF:latest", "prompt":"Who are you"}'

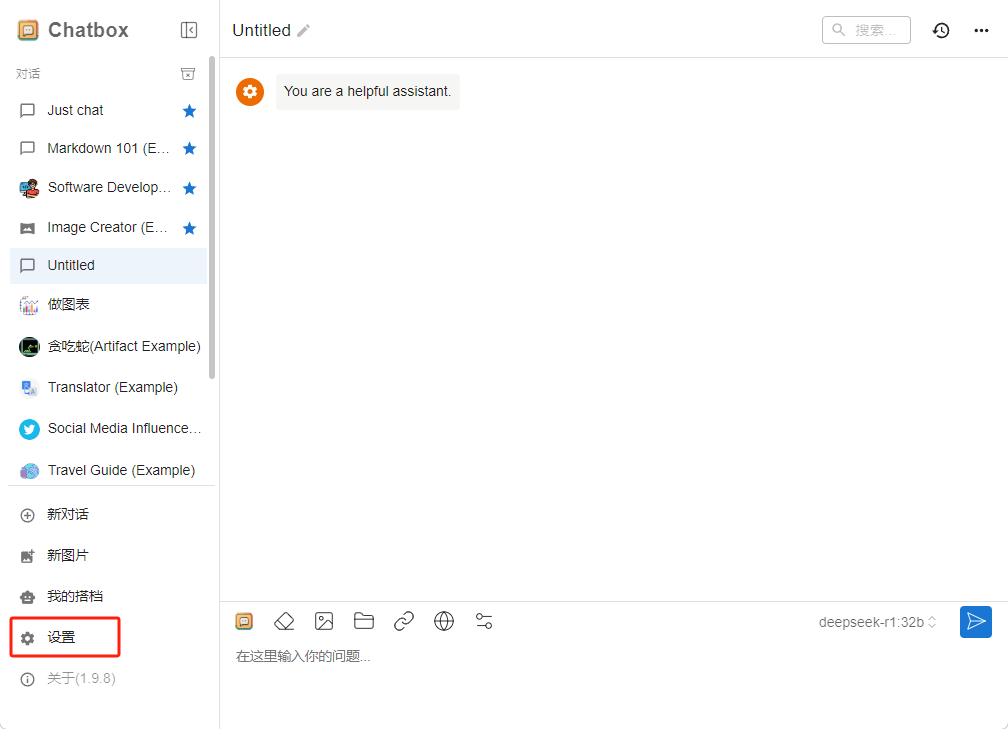
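The same request can also be sent from a script. Below is a minimal Python sketch, assuming the default Ollama address and the deepseek-r1:7b model used above. The helper names `build_request` and `ask` are illustrative, not part of Ollama. The `"stream": False` field is an addition to the curl example: it asks Ollama to return a single JSON object rather than a stream of chunks, which keeps the parsing simple.

```python
import json
import urllib.request

# Default local Ollama endpoint, as used in the curl commands above.
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_request(prompt: str, model: str = "deepseek-r1:7b") -> urllib.request.Request:
    """Build (but do not send) a POST request for /api/generate."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask(prompt: str, model: str = "deepseek-r1:7b") -> str:
    """Send the prompt to the local model and return the generated text.

    Requires a running Ollama server with the model already downloaded.
    """
    with urllib.request.urlopen(build_request(prompt, model)) as resp:
        return json.loads(resp.read().decode("utf-8"))["response"]
```

With Ollama running, `print(ask("Who are you"))` should print the model's reply. If you use the ModelScope mirror, pass the full mirror model name as `model`.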

The terminal experience for conversations isn't ideal, so I recommend using the ChatBox client. Simply download the client for your platform from the download page (https://chatboxai.app/en#download) and install it.

Open the ChatBox client and click the "Settings" menu in the bottom-left corner.

In the settings window that pops up, select the "Model" tab, choose "Ollama" as the model provider, and enter the Ollama API service address (default: http://127.0.0.1:11434).

You can now interact with the local model in ChatBox.

Tips for Communicating with DeepSeek

DeepSeek has strong reasoning capabilities, so you don't need complex prompt engineering; just communicate with it in plain language. In fact, major AI models have become much more capable: as long as you describe your needs clearly, you'll get good answers. Following the principles below can yield even better results:

General Formula

- Role: "You are a senior marketing expert."

- Task: "Write a Xiaohongshu (Little Red Book) promotional copy for a new lipstick."

- Context: "Targeting working women aged 25-35, creating an advanced caramel-toned atmosphere."

- Requirements: "Include emojis, list 5 reasons to recommend the product in the main text, and keep it under 500 words."
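If you send prompts from a script, the four parts of the formula can be kept as separate fields and joined only at the end. This is a minimal sketch; the function name `build_prompt` and the one-part-per-line layout are illustrative choices, not something DeepSeek requires.

```python
def build_prompt(role: str, task: str, context: str, requirements: str) -> str:
    """Join the four parts of the general formula into one prompt.

    Empty parts are skipped, so the formula still works when you only
    have, say, a role and a task.
    """
    parts = [role, task, context, requirements]
    return "\n".join(part.strip() for part in parts if part.strip())


prompt = build_prompt(
    role="You are a senior marketing expert.",
    task="Write a Xiaohongshu (Little Red Book) promotional copy for a new lipstick.",
    context="Targeting working women aged 25-35, creating an advanced caramel-toned atmosphere.",
    requirements="Include emojis, list 5 reasons to recommend the product in the main text, "
                 "and keep it under 500 words.",
)
print(prompt)
```

Keeping the parts separate makes it easy to reuse the same role and requirements while swapping only the task.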

1. Be Specific, Not Vague

- Principle: Questions should be clear and focused, avoiding broad or open-ended queries.

- Example:

- Incorrect: "How to make money?" →

- Correct: "How can I earn over 5,000 RMB per month through freelance writing?"

2. Provide Goals, Not Steps

- Principle: Don't give too many specific steps, as this limits the AI's creativity. In short: tell the AI what you want done, not how to do it.

- Example:

- Incorrect: "Generate a research report on xxx. First, search for materials online, then outline..." →

- Correct: "Generate a research report on xxx, leveraging your professional expertise. Avoid clichés, and ensure all conclusions are backed by solid evidence."

3. Provide Background Information

- Principle: When dealing with specialized fields or personalized scenarios, provide background information. Don't make the AI waste computational power guessing.

- Example:

- "I'm a primary school student. Can you explain what quantum entanglement is?"

- "I'm a beginner in programming. Can you explain recursive functions with a Python example?"

4. Assign a Role to the AI

- Principle: Assigning a role to the AI can make its answers more professional and focused.

- Example:

- "You are a computer expert. Please recommend a computer configuration based on the following requirements: Purpose: daily office work, software programming, gaming, and watching movies; Budget: 10,000-15,000 RMB."

- "You are a seasoned marathon coach, skilled at creating training plans for runners of different levels."

- "Pretend you're Elon Musk. Using first-principles thinking, analyze why the small team behind DeepSeek has achieved such significant success in the AI field."

5. Ask Follow-Up Questions Promptly

- Principle: Take full advantage of the AI's strength—it will answer any question without getting annoyed.

- Example: "You mentioned the xxxx theory earlier, but I didn't understand. Can you explain it in more detail?" I love this phrasing. You can start with any question, then pick something interesting from the AI's response and ask it to elaborate. After doing this 10 times, you'll find that the AI has opened doors to knowledge you've never encountered before.

6. Hit a Wall? Turn Around Immediately

This doesn't mean you should abandon the AI if it doesn't work well right away. Instead, if you're unsatisfied with the AI's responses after several attempts, try optimizing your prompts, changing the angle or format, or adding more details. Don't just keep clicking the "Regenerate" button repeatedly.

7. Use Meta-Prompts

- Principle: Ask the AI directly how to communicate with AI, or let the AI ask you questions to help clarify your thoughts.

- Example:

- "I'm new to AI. Can you give me 10 principles for communicating effectively with AI?"

- "If I want the AI to help me create a high-quality research report, how should I phrase my question?"

- "I want to develop an AI application to help my company reduce costs and improve efficiency, but I'm not sure where to start. Please ask me 5 key questions to guide my thinking and ultimately find a product direction."

8. Break Down Complex Tasks Step by Step

- Principle: Divide complex problems into smaller steps and solve them one by one. If you ask many questions at once, the AI tends to give only a shallow, summarized answer.

- Example: If you want the AI to analyze the impact of global climate change, first ask about the causes, then analyze environmental, economic, and other dimensions separately.
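When working from a script, the same idea becomes a list of sub-questions asked one after another. The sketch below is only an illustration: `split_task` and `run_steps` are hypothetical helper names, the question wording is an assumption, and `ask` stands for any function that sends a prompt to a model and returns its text reply.

```python
from typing import Callable, List


def split_task(topic: str, dimensions: List[str]) -> List[str]:
    """Turn one broad question into a sequence of narrower ones."""
    questions = [f"What are the main causes of {topic}?"]
    questions += [f"Analyze the {dim} impact of {topic}." for dim in dimensions]
    return questions


def run_steps(questions: List[str], ask: Callable[[str], str]) -> List[str]:
    """Ask each sub-question in order and collect the answers."""
    return [ask(question) for question in questions]


steps = split_task("global climate change", ["environmental", "economic"])
for step in steps:
    print(step)
```

Each answer can then be reviewed on its own before moving on to the next step.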

9. Provide Correct Examples

- Principle: If you find that repeatedly adjusting your prompts still doesn't yield the desired results, try "showing the AI how it's done."

- Example: I often ask the AI to design AI workflows for me, which I then import directly into Dify. I might phrase it like this: "Please output the workflow you just designed in YAML format. Here is the correct YAML file format for workflows: xxxxxx"

10. Speak Naturally, Don't Show Off

- Principle: Avoid overly complex vocabulary (jargon, abbreviations, technical terms, etc.). Use plain language unless you're specifically asking about the meaning of a technical term.

- Example:

- Incorrect: "How to perform FT on a large model?" →

- Correct: "How do I fine-tune a large model? Please provide detailed steps and the tools required."

11. Maintain Continuity in Multi-Turn Conversations

- Principle: When continuing a previous conversation, reference key points to avoid repetition.

- Example:

- Incorrect: "What are the risks of the next execution plan?" →

- Correct: "Based on the marketing strategy mentioned earlier, what are the risks of the next execution plan?"
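Continuity matters even more when you call the local model through the API instead of a chat client: Ollama's `/api/chat` endpoint only sees the messages included in each request, so the earlier turns have to be sent again. Here is a minimal sketch, assuming the default Ollama address and the deepseek-r1:7b model; the `Conversation` class is a hypothetical helper, not part of Ollama.

```python
import json
import urllib.request

# Default local Ollama chat endpoint (assumes the default port).
CHAT_URL = "http://localhost:11434/api/chat"


class Conversation:
    """Keeps the message history so each request carries earlier turns."""

    def __init__(self, model: str = "deepseek-r1:7b"):
        self.model = model
        self.messages = []  # list of {"role": ..., "content": ...} dicts

    def payload(self, user_text: str) -> dict:
        """Request body: all earlier turns plus the new user message."""
        new_message = {"role": "user", "content": user_text}
        return {
            "model": self.model,
            "messages": self.messages + [new_message],
            "stream": False,
        }

    def record(self, user_text: str, reply: str) -> None:
        """Store a finished exchange in the history."""
        self.messages.append({"role": "user", "content": user_text})
        self.messages.append({"role": "assistant", "content": reply})

    def ask(self, user_text: str) -> str:
        """Send the message to a running Ollama server and return the reply."""
        request = urllib.request.Request(
            CHAT_URL,
            data=json.dumps(self.payload(user_text)).encode("utf-8"),
            headers={"Content-Type": "application/json"},
            method="POST",
        )
        with urllib.request.urlopen(request) as resp:
            reply = json.loads(resp.read().decode("utf-8"))["message"]["content"]
        self.record(user_text, reply)
        return reply
```

With Ollama running, create `chat = Conversation()` and call `chat.ask(...)` for each turn; a later question such as "What are the risks of the next execution plan?" is then sent together with the earlier marketing-strategy discussion.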

12. Try Different Phrasings to Overcome Limitations

- Principle: Ask the AI to answer the same question in different ways.

- Example:

- "Can you explain the training process of DeepSeek-R1 using a flowchart?"

- "Can you compare the advantages of DeepSeek-R1 over OpenAI's GPT-4 in a table?"

13. Validate with Practice and Provide Feedback

- Principle: During conversations with AI, timely feedback is crucial. If the AI's response meets your expectations, acknowledge and thank it. If the response is unsatisfactory, provide specific feedback to guide the AI toward improvement.

- Example:

- "After following your study method, my grades improved by 10% in two weeks. What should I do next to consolidate this progress?"

- "Great, you've fully understood this article. Now, based on the methods mentioned, generate an article titled 'How to Improve AI Writing Skills.'"

- "The data you provided earlier contradicts the 2023 statistics. The latest report shows XX should be XX."

14. Treat the AI as an Equal Conversational Partner

- Principle: Pose open-ended questions to engage the AI in deeper thinking.

- Example: "Some say AI is becoming more human-like, while humans are becoming more AI-like. What do you think?"

15. Combine Multiple Tools

Remember, our goal is to solve problems. If one AI tool doesn't work, try another. Often, the best approach is to leverage the strengths of multiple AI tools in combination. For example, when writing:

- Use Kimi for processing long-form content.

- Generate images with Midjourney.

- Polish the text and correct typos with DeepSeek.

- If you need to turn the article into a presentation, import it into Gamma to create a PowerPoint.